Actively Avoid Insights: 4 Useful KPI Measurement Techniques

- Fahad H

- Feb 18, 2009


Yes. I noticed the slightest hint of sarcasm in the title of this post.

This post covers four commonly used measurement techniques that 9 times out of 10 work against the evolution of Reporting Squirrels into Analysis Ninjas.

I'll also admit that most of the time when I encounter them I might think slightly less of you (especially if you present the aggregate version to me; presenting the segmented view at least buys you time to explain :)).

If I am being slightly tough-minded here, it is only because I am hugely upset that analytics on the web is deeply under-leveraged, though the good lord knows we pump out KPIs by the minute.

One root cause of this under-leveraging is our dashboards, crammed full of metrics that use these four measurement techniques. The end result: data puking, not insight revelation.

So who are the four amigos?

Each is a technique that, when used "as normal", actively hinders your ability to effectively communicate the insights your data contains.

Only one caveat: I am not saying these techniques are evil. What I am saying is don't be "default" when using them; be smart (or don't use them).

Before we get going, here's my definition of a Key Performance Indicator:

Measures that help you understand how you are doing against your objectives.

Note the stress on Measures. And Objectives. If it doesn't meet both criteria, it's not a KPI.

With that out of the way, let's understand why Averages, Percentages, Ratios and Compound Metrics are four usually disappointing measurement techniques.

Raise your hand if you are average. Ok, just Ray? No one else?

Raise your hand if your visit to any website reflects an average visit. Just you, Kristen?

No one is "average" and no user experience is "average". But Averages are everywhere because 1) well, they are everywhere, which feeds the cycle, and 2) they are an easy way to aggregate (roll up) information so that others can see it more easily.

Sadly, seeing it more easily does not mean we actually understand it or can identify insights.

Take a look at this number:

51 seconds.

Ok, you know something.

Now what?

Are you any wiser? Do you know any better what to do next? Any brilliant insights?

No.

It is likely that the Average Time on Site number for your website has been essentially unchanged for a year (and yet, yes sirree Bob, it is still on your "Global Senior Website Management Health Dashboard"!).

Averages have an astonishing capacity to give you "average" data, they have a great capacity to lie, and they hinder decision making. [You may disagree, which is quite ok; please share feedback via comments.]

What can you do?

I have two recommendations for you to consider.

Uno. Segment the data.

Identify your most important / interesting segments for your business and report those along with the Overall averages.

Now you have context. Social Media, boo! Paid Search, booer! Organic, yea! Email, yea! Etc., etc. :)

While this is not the optimal outcome, it will at the very minimum give your Decision Makers context within which to ask questions and think more clearly (and, mostly, to wonderfully ignore the overall average number).

So on your dashboards and email reports make sure that the Key Performance Indicators that use Averages as the measurement technique are shown segmented. It will prod questions. A good thing, as Martha would say.
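To make "show it segmented" concrete, here is a minimal Python sketch that reports the overall average next to per-segment averages. It assumes you can export visit-level rows of (traffic source, time on site in seconds); the segment names and numbers are made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical visit-level rows: (traffic source, time on site in seconds).
# Made-up numbers for illustration only.
visits = [
    ("organic", 120), ("organic", 95), ("organic", 240),
    ("paid_search", 5), ("paid_search", 12), ("paid_search", 8),
    ("email", 180), ("email", 60),
    ("social", 3), ("social", 4),
]

def segmented_averages(rows):
    """Return the overall average followed by one average per segment."""
    by_segment = defaultdict(list)
    for segment, seconds in rows:
        by_segment[segment].append(seconds)
    report = {"overall": mean(seconds for _, seconds in rows)}
    for segment, values in sorted(by_segment.items()):
        report[segment] = mean(values)
    return report

for name, avg in segmented_averages(visits).items():
    print(f"{name:>12}: {avg:6.1f} s")
```

With these made-up rows the overall average is about 73 seconds, while organic visits average about 152 seconds and paid search about 8. That spread is the question-prodding context the overall number hides.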

Dos. Distributions baby, distributions!

If averages often (*not always*) stink then distributions rock.

They are a wonderful way to dissect what makes up the average and look at the numbers in a much more manageable way.
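A rough Python sketch of the idea: bucket each visit's time on site into bands and count visits per band, instead of collapsing everything into one mean. The band edges and the visit durations below are made-up assumptions for illustration.

```python
from bisect import bisect_left
from statistics import mean

# Band edges in seconds (an assumption; choose bands that suit your site).
EDGES = [10, 60, 180, 600]
LABELS = ["0-10s", "11-60s", "61-180s", "181-600s", "over 600s"]

def time_on_site_distribution(seconds_per_visit):
    """Return a list of (band label, visit count, share of visits)."""
    counts = [0] * len(LABELS)
    for s in seconds_per_visit:
        # bisect_left gives the index of the first edge >= s, i.e. the band.
        counts[bisect_left(EDGES, s)] += 1
    total = len(seconds_per_visit)
    return [(label, n, n / total) for label, n in zip(LABELS, counts)]

# Made-up visits: lots of quick bounces plus a small group of fanatics.
visits = [2, 3, 4, 5, 5, 6, 8, 9, 45, 900, 1200, 1500]

print(f"Average: {mean(visits):.1f} s")  # one number, no story
for label, n, share in time_on_site_distribution(visits):
    print(f"{label:>10}: {n:3d} visits ({share:.0%})")
```

Here the average comes out at about 307 seconds, which falls in the 181-600s band, a band containing no visits at all: eight visits are over within 10 seconds and three run past 10 minutes.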

Here's how I like looking at time on site. . . .

So delightful.

I can understand the short visits (most!) and decide what to do (ignore 'em, focus hard-core, etc.).

I can see there is deep loyalty (about 30%), and I can figure out what these people like, what they don't like, where they come from, and what else I can do. [Would you have imagined from the Average Time on Site that you have fanatics on your site who are spending more than 10% on each visit!!]

I can try to take care of the midriff; what is up with that, anyway?

See what I mean? The difference between the two: Reporting Squirrel vs. Analysis Ninja!

Nothing, really nothing, is perhaps more ubiquitous in our world of Web Analytics than percentages.

You can't take a step without bumping into one.

Some percentages are ok, but very very rarely are they good at answering the "so what" or the "now what" questions.

The problem with percentages is that they gloss over what's really important and also tend to oversell or undersell the opportunity.

Let's compare two pictures. In the first one we just report conversion rates; see what insights you can get from this one. . . .

Now try to answer the question: So What?

Any answers?

Yes, some conversion rates are lower and others are higher. Anything else? Nope?

Ok try this one. . . .

Better, right?

You get context. The raw numbers give you key context around performance.

[Update: I use this plugin to get raw numbers next to conversion rates in Google Analytics: Better Google Analytics Firefox Extension. I highly recommend it; you get the above and a bunch more really cool stuff. A must-have for GA users.]

Notice one more thing (I'll touch on this in a bit as well): if you only report the overall conversion rate (as we all do in our dashboards), your percentage KPI is much worse. You get nothing.

By showing the various "segments" of conversions, I am telling the story to Sr. Management much better: what's working, what needs work.

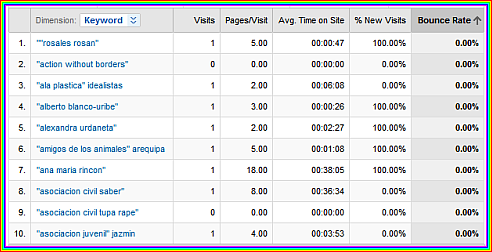

Here's another constant problem with conversion rates. . . .

I am looking at a table of data (in any tool really) and it looks like there's something here.

Ok, well, I want to fix things. I want to know where I can improve bounce rates, so I sort. . . .

Data, yes. Totally useless. I can't possibly waste my time on things that bring one visit.

So I re-sort to see if I can find where it's totally working for me. . . .

Strike three: again not very useful; just take a peek at the Visits column.
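One crude guard, sketched in Python below, is to drop low-volume rows before sorting by bounce rate. The page names, the numbers, and the 100-visit floor are hypothetical; a volume floor only hides the noisiest rows and is no substitute for a significance test.

```python
# Hypothetical rows: (landing page, visits, bounces). Made-up numbers.
rows = [
    ("/promo-a", 1, 1),
    ("/old-post", 2, 0),
    ("/home", 5200, 2340),
    ("/pricing", 1800, 1170),
    ("/blog", 950, 380),
]

MIN_VISITS = 100  # assumed volume floor; tune it to your own traffic

def bounce_rate(row):
    _, visits, bounces = row
    return bounces / visits

# The naive sort: one- and two-visit pages land at the top and bottom.
naive = sorted(rows, key=bounce_rate, reverse=True)

def worst_pages(rows, min_visits=MIN_VISITS):
    """Highest bounce rate first, ignoring rows with too few visits to matter."""
    eligible = [row for row in rows if row[1] >= min_visits]
    return sorted(eligible, key=bounce_rate, reverse=True)

print("naive top:", naive[0][0], " naive bottom:", naive[-1][0])
for page, visits, bounces in worst_pages(rows):
    print(f"{page:<10}{visits:>6} visits  {bounces / visits:.0%} bounce")
```

With the floor in place, the pages worth your time (here /pricing at 65%) rise to the top instead of a page with a single visit.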

What I really want is not where the percents are high or low. I want to take action.

What I really really want is some way of identifying statistically significant data, where bounce rates are "meaningfully up" or "meaningfully down" so that I can take action confidently.

I can't do that in Google Analytics. Quite sad.

Some other tools, like Coremetrics (by default) and WebTrends (in some places by default, or with an external "plugin" you can buy from consultants), will compute a % delta (the percentage difference between two numbers) and color it red or green.

That's not what I am talking about.

That is equally useless, because that percentage difference makes you take action even when the difference between the two numbers is not significant. Don't fall for that.
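To see why a colored % delta can mislead, here is a minimal Python sketch of a standard two-proportion z-test with a pooled standard error, one common way to check whether two rates really differ. This is a swapped-in textbook technique, not how Coremetrics, WebTrends, or Google Website Optimizer compute anything, and the bounce counts are made up.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided two-proportion z-test with a pooled standard error.

    x1/n1 is the earlier rate, x2/n2 the later one. Assumes the pooled rate
    is strictly between 0 and 1. Returns (percent delta, z score, p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    pct_delta = (p2 - p1) / p1  # what a red/green % delta column shows
    return pct_delta, z, p_value

# Made-up bounce counts: (bounces, visits) for last week vs this week.
comparisons = {
    "tiny page": ((4, 10), (6, 10)),
    "big page": ((2000, 5000), (2150, 5000)),
}

for name, ((x1, n1), (x2, n2)) in comparisons.items():
    delta, z, p = two_proportion_z_test(x1, n1, x2, n2)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{name:<10} delta {delta:+.1%}  z={z:.2f}  p={p:.3f}  {verdict}")
```

The first comparison shows a +50% delta that is not significant (p is roughly 0.37); the second shows a modest +7.5% delta that is (p is well under 0.01). The normal approximation is rough at ten visits per period, which is one more reason not to act on that first delta.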

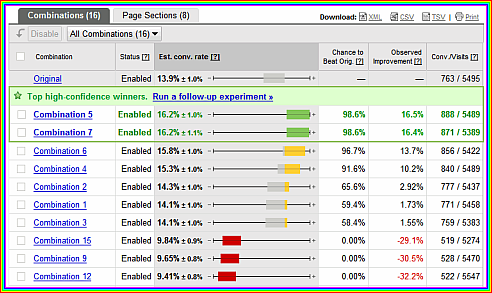

It is truly a crying shame that Google Analytics does not have something like what Google Website Optimizer has. . . .

. . . . a trigger for me to know when results are statistically significant, and by how much I should jump for joy or how many hairs I need to pull out of my head in frustration. See those sweet colored bars in the middle? See the second column after that? Minorly orgasmic, right?

Isn't it amazing that after 15 years, web analytics tools are still not smart, even though they have so much data and computing power? Ironic if you think about it.

What can you do?

I have three recommendations for you to consider.

Uno. Segment the data.

Wait, did I not say that already? : )

Do it.

Useless. . . .

Useful. . . .

Show opportunities, show failures, let the questions come.

Dos. Always show raw numbers.

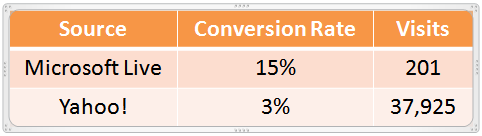

Often conversion rates mask the opportunity available.

The conversion rate from Live is 15% and the conversion rate for Yahoo! is 3%.

Misleading.

We all know that Yahoo! has significantly more inventory than Live, and even if you had all the money in the world you couldn't buy enough Live traffic to cash in on that 15% conversion rate.

Show raw Visits. It will look something like this:

See what difference that would make on a dashboard? No false alarms.

You overcome the limitation of just showing the percentage.
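A small Python sketch of a dashboard row that pairs each rate with its raw numbers. Only the 15% and 3% rates come from the text; the visit and conversion counts are hypothetical, picked to reproduce those rates.

```python
# Hypothetical rows: (search engine, visits, conversions).
# Only the 15% and 3% rates come from the text; the volumes are made up.
rows = [("Live", 400, 60), ("Yahoo!", 12000, 360)]

def with_raw_numbers(rows):
    """Pair each conversion rate with raw visits, conversions, and share of conversions."""
    total_conversions = sum(conv for _, _, conv in rows)
    return [
        {
            "engine": engine,
            "visits": visits,
            "conversions": conv,
            "rate": conv / visits,
            "share": conv / total_conversions,
        }
        for engine, visits, conv in rows
    ]

print(f"{'Engine':<8}{'Visits':>8}{'Conv.':>7}{'Rate':>7}{'Share':>8}")
for r in with_raw_numbers(rows):
    print(f"{r['engine']:<8}{r['visits']:>8,}{r['conversions']:>7,}"
          f"{r['rate']:>7.0%}{r['share']:>8.0%}")
```

With these made-up volumes Live converts at 15% but delivers about 14% of conversions, while Yahoo! converts at 3% and delivers about 86%.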

In the example above I am using Visits because I want to show the HiPPOs where the constraints are (without them having to think, thus earning my Ninja credentials!). But I am most fond of pairing rates with raw Outcomes (Orders, Average Order Value, Distribution of Time, Task Completion Rates, etc.) because HiPPOs love Outcomes.

Tres. Don't use % delta! Use Statistical Significance et al.

When you use percentages it is often very hard to discern what is important, what is attention worthy, what is noise and what is completely insignificant.

Be very aware of it and use sophisticated analysis to identify for your Sr. Management (and yourself!) what is worthy.

Use Statistical Significance, it truly is your BFF!

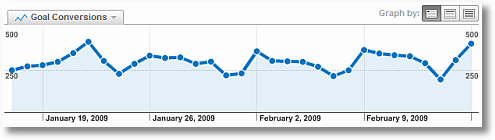

Use Statistical Control Limits, they help you identify when you should jump and when you should stay still (so vital!).
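As a sketch of what control limits can look like for a daily conversion rate, here is a p-chart in Python: a center line at the pooled rate and limits three standard errors either side, which widen on low-traffic days. The post does not say which kind of control chart to use; the p-chart, the 3-sigma width, and the daily numbers are all assumptions. For brevity the center line is pooled from the same days being checked; in practice you would estimate it from a stable baseline period.

```python
from math import sqrt

def p_chart(daily):
    """3-sigma p-chart for daily conversion rates.

    daily: list of (conversions, visits), one per day.
    Returns the center line and, per day, (rate, lower, upper, outside_limits)."""
    p_bar = sum(c for c, _ in daily) / sum(n for _, n in daily)
    points = []
    for conversions, visits in daily:
        sigma = sqrt(p_bar * (1 - p_bar) / visits)  # wider on low-traffic days
        lower = max(0.0, p_bar - 3 * sigma)
        upper = min(1.0, p_bar + 3 * sigma)
        rate = conversions / visits
        points.append((rate, lower, upper, not lower <= rate <= upper))
    return p_bar, points

# Made-up week of (conversions, visits).
daily = [(20, 1000), (22, 1100), (18, 950), (21, 1000), (45, 1050), (19, 980), (23, 1020)]

center, points = p_chart(daily)
print(f"center line {center:.2%}")
for day, (rate, lower, upper, outside) in enumerate(points, start=1):
    flag = "  <- investigate" if outside else ""
    print(f"day {day}: {rate:.2%} (limits {lower:.2%} to {upper:.2%}){flag}")
```

Only the fifth day (45 conversions from 1,050 visits) falls outside its limits; the other day-to-day wiggles are noise you can stay still for.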

This is all truly sexy cool fun, trust me.

Can I be honest with you? [Ok so I can hear your sarcastic voice saying: "Why stop now?" ;)]

Ratios have an incredible capacity to make you look silly (or even "dumb").

I say that with love.

What's a ratio?

"The relative magnitudes of two quantities (usually expressed as a quotient)." (Wordnetweb, Princeton.)

That was easy. : )

In real life you have seen ratios expressed as 1.4 or as 4:2 or other such variations.

You are comparing two numbers with the desire to provide insights.

Say, the ratio of new to returning visitors. Or the ratio of friend requests sent on Facebook to friend requests received. Or the ratio of articles submitted on a tech support website to articles read. Or… make your own.

They abound in our life. But they come with challenges.

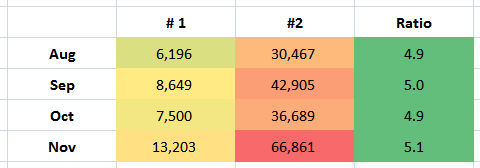

The first challenge to be careful of is that the two underlying numbers could shift dramatically without any impact on your ratio (then you, my friend, are in, shall we say, a pickle). . . .

I have put my "brilliant" Excel skills to work to demonstrate that point. In your dashboard you'll show the ratio (all "green" for four months). Yet the fundamentals, which are really what your Sr. Management is trying to get at, have changed dramatically and are perhaps worth an investigation, yet they'll get overlooked.
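The same point in a few lines of Python. The monthly counts of new and returning visitors are made up, and showing month-over-month change next to the ratio is just one illustrative way of surfacing the fundamentals.

```python
# Hypothetical monthly counts: (month, new visitors, returning visitors).
months = [
    ("Jan", 12000, 10000),
    ("Feb", 6000, 5000),
    ("Mar", 24000, 20000),
    ("Apr", 3600, 3000),
]

def ratio_with_components(rows):
    """Yield (month, ratio, change in new, change in returning) per month.

    Changes are month-over-month fractions; None for the first month."""
    previous = None
    for month, new, returning in rows:
        if previous is None:
            change_new = change_ret = None
        else:
            change_new = (new - previous[0]) / previous[0]
            change_ret = (returning - previous[1]) / previous[1]
        yield month, new / returning, change_new, change_ret
        previous = (new, returning)

def pct(x):
    return "   n/a" if x is None else f"{x:+6.0%}"

for month, ratio, d_new, d_ret in ratio_with_components(months):
    print(f"{month}: ratio {ratio:.2f}  new {pct(d_new)}  returning {pct(d_ret)}")
```

The ratio prints 1.20 every month while new visitors drop 50%, quadruple, then fall 85%.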

I hear you protesting all the way from Spain: "Aw, come on, you have got to be kidding me!" I kid you not.

Think of all the effort you have put into automating the dashboard and cramming all the data into it. Ahh… you've stuffed it with percentages and ratios to make it fit. And you've automated it to boot.

Casualty? Insights. Actionability.

The second problem with ratios is a nuance on the above. It is perhaps more insidious. It occurs when you compare two campaigns or sources or people or other such uniquely valuable things.

I see it manifested by a HiPPO / Consultant / Vendor Serviceman foisting upon you that 1.2 is a "good ratio".

Then you start measuring, and people start gaming the system, because 12/10 gets you that ratio, as does 12,000/10,000. Yet both get "rated" the same, and that, as you'll agree, is dumb.
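One way to show why 12/10 and 12,000/10,000 deserve different treatment is to put an approximate interval around each ratio. This Python sketch assumes the two counts are independent and roughly Poisson, and uses the delta-method standard error of the log ratio, sqrt(1/a + 1/b); that is a swapped-in approximation, rough for small counts, not a method from the post.

```python
from math import exp, log, sqrt

def ratio_interval(a, b, z=1.96):
    """Approximate 95% interval for the ratio a/b of two independent counts.

    Assumes both counts are roughly Poisson and uses the delta-method
    standard error of log(a/b), sqrt(1/a + 1/b). Rough for small counts."""
    r = a / b
    se = sqrt(1 / a + 1 / b)
    return r, exp(log(r) - z * se), exp(log(r) + z * se)

for a, b in [(12, 10), (12000, 10000)]:
    r, lo, hi = ratio_interval(a, b)
    print(f"{a:>6}/{b:<6} ratio {r:.2f}  plausible range {lo:.2f} to {hi:.2f}")
```

Both ratios are 1.20, but the 12/10 range runs from roughly 0.52 to 2.78 (so it is consistent with a ratio below 1), while the 12,000/10,000 range is roughly 1.17 to 1.23.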

What can you do?

I have two recommendations for you to consider.

Uno. Resist just showing the ratio.

Throw in a raw number, throw in some other type of context, and you are on your way to sharing something that will highlight an important facet and prod good questions.

Enough said.

Dos. Resist the temptation to set "golden" rules of thumb.

This is very hard to pull off, we all want to take the easy way out.

But setting such rules means you'll incent the wrong behavior and hinder any thought about what's actually good or bad.

You can use a ratio as a KPI, but incent the underlying thing of value: for example, Reach, and not the ratio of Visits to Subscribers (!!).

Compound metrics produce an unrecognizable paste after mixing a bunch of, perhaps perfectly good, things.

All kidding aside compound metrics are all around us. Most Government data tends to be compound metrics (is it a wonder that we understand nothing that the government does?).

A compound metric is a metric whose sub components are other metrics (or it is defined in terms of other computations).

Here's an example:

(% of New Visits) times (Average Page Views per Visit) equals, making something up here, Visit Depth Index.

What?

Yes what indeed.

The environments where compound metrics thrive are ones where things are really really hard to measure (so we react by adding and multiplying lots of things) or when confidence in our ability to drive action overtakes reality.

Honestly, no matter what the outcome is here (or how much of a "god's gift to humanity" it is), how can you possibly do anything with this:

Website Awesomeness= (RT*G)+(T/Q)+((z^x)-(a/k)*100)

(If you don't know what those letters stand for, just make something up.)

Compound metrics might be important (after all, the Government uses them), but they have two corrosive problems:

1) When you spit a number out, say 9 or 58 or 1346, no one except you has any idea what it means (so a huge anti-actionability bias), and worse,

2) You have no way of knowing if it is good or bad or if you should do something. You can easily see how a rise in some numbers and a fall in others could cancel out so that nothing seems to happen. Or all hell could break loose and yet you still get 9. Or 58. Or 1346.
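Here is the made-up Visit Depth Index from above as a Python sketch, fed three hypothetical months whose visitors behave very differently.

```python
def visit_depth_index(pct_new_visits, avg_page_views):
    """The made-up compound metric from the text:
    (% of New Visits) times (Average Page Views per Visit)."""
    return pct_new_visits * avg_page_views

# Hypothetical months with very different underlying behavior.
scenarios = {
    "steady": (0.50, 4.0),
    "new-visitor surge": (0.80, 2.5),  # many newcomers, shallow visits
    "loyalists only": (0.25, 8.0),     # few newcomers, deep visits
}

for name, (pct_new, pv) in scenarios.items():
    index = visit_depth_index(pct_new, pv)
    print(f"{name:<18} new={pct_new:.0%}  pv/visit={pv:.1f}  index={index:.2f}")
```

Every scenario prints an index of 2.00. Reported as individual components, the three months look nothing alike.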

What can you do?

I have three recommendations for you to consider.

Uno. Take them with a grain of salt (or a truck full of salt).

Really.

Regardless of whether it comes from me or President Obama or [insert the name of your favorite religious deity here].

Stress test how you'll overcome the two challenges above. If your compound metric passes those tests you are all set.

Dos. Decompose into the key "critical few" components.

Grinding RT and G and T and Q and z and x and a and k into a mush is the problem. Not RT or G or T or Q or z or x or a or k themselves.

Spend some time with your HiPPOs and Marketers and the people who pay your salary. Try to understand what the business is really trying to solve for. Put your nose to the grindstone and do some hard work.

At the end of this process, as you decompose the individual components, what you'll realize is that all you need is RT and Q and G. Report them.

No, not as a weird married "couple". As individuals.

Everyone will know what you are doing, and you'll help the business and your dashboard focus and drive action.

Tres. Revisit and revalidate.

If you must use compound metrics, please revisit them from time to time to see if they are adding value. Also check that they are adding value in all the applicable scenarios.

If you are using weights, as many compound metrics tend to do, then please, please stress-test them to ensure the weights are relevant to you. Also revalidate the weights over time to ensure you don't have to compensate for seasonality or other important business nuances.
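A simple way to stress-test weights, sketched in Python: sweep the weights over a grid, recompute a weighted-sum compound score, and tally which option wins. The campaigns, the three component metrics (assumed already normalized to 0-1), and the 0.1 grid step are hypothetical.

```python
from itertools import product

# Hypothetical, already-normalized (0-1) component metrics per campaign.
campaigns = {
    "Campaign A": {"reach": 0.9, "engagement": 0.3, "conversion": 0.4},
    "Campaign B": {"reach": 0.4, "engagement": 0.8, "conversion": 0.5},
}

def score(metrics, weights):
    """Weighted-sum compound metric; the weights are assumed to sum to 1."""
    return sum(weights[k] * metrics[k] for k in weights)

def winners_across_weights(campaigns, step=0.1):
    """Try every weight combination on a grid and tally which campaign wins."""
    n = round(1 / step)
    tally = {}
    for i, j in product(range(n + 1), repeat=2):
        if i + j > n:
            continue  # weights must sum to 1
        weights = {"reach": i / n, "engagement": j / n, "conversion": (n - i - j) / n}
        best = max(campaigns, key=lambda c: score(campaigns[c], weights))
        tally[best] = tally.get(best, 0) + 1
    return tally

print(winners_across_weights(campaigns))
```

If the tally splits between campaigns, as it does with these made-up numbers, the "winner" is mostly a product of the weights you picked, which is exactly what you want to know before putting the score on a dashboard.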

End of story.

I'll close with this: there are two schools of thought about Analytics.

One is that math is easy so let's go add, subtract, multiply and divide because calculators, computers and data are easily available.

This is the "Reporting Squirrel" mental model, data above all else.

The other is that your entire existence is geared towards driving action. So think, stress-test, be smart about the math you do. Computers and calculators are cheap, but that does not excuse doing the things outlined above.

This is the "Analysis Ninja" mental model, insights above all else.

Good luck!

Ok, now it's your turn. What do you think of these four measurement techniques? Agree with my point of view? Why? Why not? Care to share your own bruises from the wonderful world of Web Analytics Key Performance Indicators? Got questions?

Please share your feedback. Thank you.

