A marketer’s guide to Facebook’s fake news problem and what is being done to fix it

- Fahad H

- Jan 11, 2017


In late December, the defense minister of Pakistan tweeted an ominous reminder to the leaders of Israel that Pakistan “is a Nuclear state too.”

This tweet was in response to a news story in which former Israeli Defense Minister Moshe Yaalon was quoted as saying, “If Pakistan send ground troops into Syria on any pretext, we will destroy this country with a nuclear attack.” Pakistan’s Minister of Defense saw the quote and took it as a threat to his country.

The problem? The threat from Israel was not real; it was reported on a fake news site. Yaalon never said it. There was no quote, just made-up words.

Pakistan’s Minister of Defense, Khawaja Muhammad Asif, reacting to a false story from a fake news site. (The original tweet has since been deleted.)

So how did we get to this point? A point where leaders of countries are threatening each other because of news that is not merely inaccurate, but utterly fabricated?

Fake news in the internet era

Fake news is not new. It has been around for hundreds of years under various names such as “yellow journalism” and “tabloid news.” Online, it has been around almost as long as we have had a public internet, especially in the areas of health and medicine.

Go on Facebook, and at least once a day you will see a post about “melting away belly fat” and “cures for cancer.” These are not legitimate articles based on scientific research and peer-reviewed medical journals. These are simply “articles” that publishers use to lure visitors to their sites. Why? These sites make money from ad revenue. Lots of money.

Yet, however prevalent these fake news stories are, none have been as pervasive (and potentially persuasive) as the fake news surrounding the 2016 US presidential election.

Fake news has become a highly problematic issue, and there are now demands for Google and Facebook to find solutions.

Fake news and the election

Since the election, there has been a lot of press coverage and debate about the role of fake news in the election process, and not only the role of fake news, but also the role of Facebook in disseminating these posts. According to the nonprofit research group PropOrNot, fake news posts (in this case, propaganda) disseminated by Russia alone were viewed over 213 million times.

In the days immediately following the election, Facebook initially denied that there was an issue. In an interview at the Techonomy conference on November 10, CEO Mark Zuckerberg stated:

“Personally, I think the idea that fake news on Facebook — which is a very small amount of the content — influenced the election in any way… I think is a pretty crazy idea. Voters make decisions based on their lived experience.”

However, in the two months since the election, this stance has seemingly changed. Facebook no longer denies that fake news is an issue and has implemented measures to combat it, which will hopefully diminish its effects.

This change of direction is likely not a moment too soon, as Germany’s government recently announced that it is proposing legislation to fine any social media site that disseminates fake news.

“If, after appropriate examination, Facebook does not delete the offending message within 24 hours, it should expect individual fines of up to 500,000 euros,” [parliamentary chair of the Social Democratic Party Thomas] Oppermann said. The subject of a fake news story would be able to demand a correction published with similar prominence, he added.

What exactly is fake news?

Since the first reports about fake news following this election, there has been a lot of misunderstanding about the issue. I now regularly see Facebook comments declaring “FAKE NEWS!” when there is a reporting error in a mainstream media news article, or when the person posting simply disagrees with the content.

So we must first understand what fake news is and what it is not, and then understand what Facebook intends to do about it and why it matters to us.

What is news?

News sites generally fall under one of the following three informal classifications:

Mainstream media/“real” news

Biased/partisan news

Fake/propaganda news

1. Mainstream media or “real” news

Larger-scale news publications, often referred to as the “mainstream media,” are defined by having trained journalists who strive to be neutral or unbiased in their coverage. They also generally follow a code of ethics, have fact checkers who check their stories for errors, and employ editors who put their stamp of approval on a story before it gets published.

Of course, errors can and do still happen, but journalistic standards and internal processes are in place to minimize them.

Additionally, opinion pieces are clearly labeled as such, making them easily distinguishable from pure news stories.

Why is all this important? Trust between the publication and the reader is the hallmark of a “real” news site.

If The New York Times were to report something in error, it would not be with the intent to deceive. If someone pointed out the error to the Times, the paper would review the claim internally. If it had indeed made an error, the error would be corrected, and the correction would be noted in an editor’s note on the article page for other readers to see.

Real consequences exist for real news publishers

The consequences for false or “sloppy” reporting can be harsh in mainstream media, and failure to adhere to journalistic standards can cost even the most reputable journalists their careers.

For instance, Dan Rather was a highly respected journalist at CBS, but he stepped down from his 24-year tenure as anchor of the CBS Evening News following a controversial news segment about former President George W. Bush’s National Guard service that failed to meet the network’s internal standards for journalistic integrity. The network also ousted four employees, including three executives, and apologized to its viewers for not properly vetting its sources.

Due to the potential for reputation-damaging consequences, mainstream media outlets are rarely found to have willfully disseminated false information. Of course, this does not mean they never get it wrong. Bad reporting certainly exists, and some very well-known publications have even been tripped up by fake news reports themselves.

What distinguishes these publications from true “fake news,” however, is the intent to be objective and to get it right, and when they don’t, they move quickly to correct the error.

What about smaller publications?

This “real” news classification is not limited to large publications; smaller niche publications — such as the one you are reading now — can also be defined as real news because they, too, follow journalistic standards.

For example, every article I produce for this site is reviewed, fact-checked, and sent to the editor for final approval. If I make a mistake or do not source a fact properly, it is sent back to me for changes until the editor is satisfied and willing to “put their name on it,” so to speak.

So, what about sites with clear bias? Are these fake news?

2. Biased or partisan sites

There is no agreed-on or official classification for this type of site, so for now we will just call them biased or, when political, “partisan.” While it is easy to tell a biased site from a “real” news site (the use of highly charged and hyperbolic language alone typically gives them away), biased sites are often difficult to distinguish from “fake news.”

One key difference between biased news sites and fake ones is that, while the writing on a biased site is generally ideologically driven, it is still (at least loosely) based in fact. A biased site’s main editorial divergence from “real” news usually lies in how it contextualizes the news it reports: it uses rhetorical devices or cherry-picked data to persuade people to think about issues in a specific way.

Now, while these sites will often present themselves as being on a par with “real” news outlets, there is often little to no editorial control and no external code of ethics that article authors subscribe to. You will also typically not find much, if any, writing on the site that contradicts the site’s agenda. There is also little to no attempt to be neutral or unbiased in their writing.

Unlike “real” news sites, which present many and differing views, biased sites will generally share the same viewpoints across all content. This does not mean all biased sites are bad; some advocate very good causes. However, they fail to meet the qualifications of a real news site in terms of diversity and editorial control and cannot be classified as such.

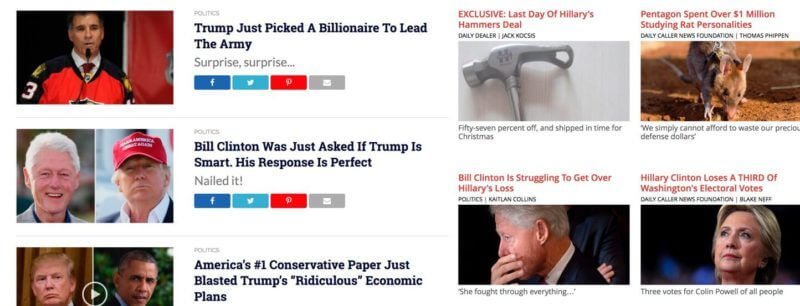

Examples of biased or partisan “news”

Just from these titles, we can see that the agendas are quite clear. The headlines also make obvious use of hyperbolic or rhetorical language.

Pictured above: Occupy Democrats (left) and The Daily Caller (right)

Note that the presence of some facts does not mean the articles themselves are neutral and objective. Conversely, the presence of deliberately misleading information does not by itself mean the site is “fake news.” These sites are created to support an existing bias and must be fact-checked, but unlike fake news sites, they don’t just make it all up.

These sites could best be defined as the ones that support our confirmation biases. We seek them out because they tell us we are right. Their use of (often cherry-picked, skewed, and/or misleading) facts is meant to support a specific viewpoint.

Real news is not meant to support our biases; it is there to tell us the story, whether we agree or not.

3. Fake news or propaganda sites

As mentioned, biased/partisan sites can be hard to distinguish from “fake news”; both types of site appear to have similar issues with truth at times. But fake news, which PolitiFact named its 2016 Lie of the Year, warrants its own designation. As PolitiFact explained:

Ignoring the facts has long been a staple of political speech. Every day, politicians overstate some statistic, distort their opponents’ positions, or simply tell out-and-out whoppers. Surrogates and pundits spread the spin. Then there’s fake news, the phenomenon that is now sweeping, well, the news. Fake news is made-up stuff, masterfully manipulated to look like credible journalistic reports that are easily spread online to large audiences willing to believe the fictions and spread the word.

Fake news sites are made to look like legitimate news sites. They are not satire (like The Onion); rather, they are sites created to look like news and be shared like news, but are completely made-up or just plain propaganda.

It comes down to facts and rhetoric

Fake news is not about the use of simple rhetoric to frame debates or mold minds, which is the purpose of a biased or partisan publication. Fake news is just plain false — or such an exaggeration of fact as to make the facts meaningless.

Examples of these fake stories include headlines like “Pope Francis Shocks World, Endorses Donald Trump for President” and “FBI Agent Suspected in Hillary Email Leaks Found Dead of Apparent Murder-Suicide.” Not only was the latter story fake, but the “Denver Guardian,” where it was posted, is not a real newspaper either.

Unlike with partisan or biased news sites, the creators of fake news sites often don’t have an agenda other than to enrich themselves. Some of the creators of fake news were even supporters of the candidates their sites maligned. Or they had no political affiliation at all, such as the teenagers in Macedonia who said they just discovered an easy way to make money. Fake news became the new affiliate marketing.

So now that we know what fake news is and isn’t, what does Facebook do about it?

Muddied waters

As mentioned previously, telling the difference between a biased and a fake story (or website) can be difficult, if not almost impossible, without fact-checking and manual review. Absent fact-checking, the only trait these sites reliably share is their use of hyperbole and inflammatory rhetoric, which real news sites generally avoid. However, that is not a measure by which a site can easily be judged.

So, what can Facebook do to fix the system? If they cannot easily classify sites, then what can they do to discern between what is real and what is not? And how do they prevent the widespread dissemination of these stories?

Facebook has a plan.

Facebook’s fake news plan

A few weeks ago, Mark Zuckerberg published a post on his Facebook page outlining the steps the company was taking to combat the spread of fake news on its platform. Here are the main points, quoted directly from his post:

Stronger detection. The most important thing we can do is improve our ability to classify misinformation. This means better technical systems to detect what people will flag as false before they do it themselves.

Easy reporting. Making it much easier for people to report stories as fake will help us catch more misinformation faster.

Third party verification. There are many respected fact checking organizations and, while we have reached out to some, we plan to learn from many more.

Warnings. We are exploring labeling stories that have been flagged as false by third parties or our community, and showing warnings when people read or share them.

Related articles quality. We are raising the bar for stories that appear in related articles under links in News Feed.

Disrupting fake news economics. A lot of misinformation is driven by financially motivated spam. We’re looking into disrupting the economics with ads policies like the one we announced earlier this week, and better ad farm detection.

Listening. We will continue to work with journalists and others in the news industry to get their input, in particular, to better understand their fact checking systems and learn from them.

They have now put these ideas to work and have developed a process for handling fake news that is in beta testing. So what is the specific process?

Step 1: Easier user reporting

Users have long been able to report stories shared on Facebook by using the drop-down arrow in the upper right-hand corner of the post. Now, there is a new option where they can report and classify the story as “fake news.”

Step 2: Verification by Poynter’s signatory fact-checkers

If there are enough “flags,” the story will be sent to fact-checkers for verification. These are not random organizations, but third-party fact-checkers that are signatories of Poynter’s International Fact-Checking Network fact-checkers’ code of principles.

The International Fact-Checking Network (IFCN) at Poynter is committed to promoting excellence in fact-checking. We believe nonpartisan and transparent fact-checking can be a powerful instrument of accountability journalism; conversely, unsourced or biased fact-checking can increase distrust in the media and experts while polluting public understanding.

Poynter’s signatories are held to a code of ethics and, if found to be in violation, will be removed from the signatory list (and thus won’t be able to contribute to Facebook’s fact-checking process). This secondary manual review by certified fact-checkers should help promote confidence in the facts themselves. Note that Facebook will use only a select few of the signatories listed on the site, not all of them. So what happens if an article is found to be fake news?

Step 3: Article is labeled “Disputed” by third-party fact-checkers

Any article found to be fake will be labeled as “disputed,” with a link to an article that will explain the classification. These articles may also be moved lower in the feed.

These articles can still be shared, but when a user goes to share one, an info box appears warning that its accuracy has been disputed.

IMPORTANT! Once an article is flagged as disputed, it cannot be promoted using Facebook Advertising channels.
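For readers who think in systems, the three steps above can be sketched as a small state machine. This is a minimal Python illustration under stated assumptions, not Facebook’s implementation: Facebook has not published how many flags count as “enough,” so `FLAG_THRESHOLD` is a hypothetical value, and `Article`, `report_fake_news`, and the `fact_check` callback are made-up names standing in for user reports, the review by Poynter signatories, and the “Disputed” label.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical: Facebook has not said how many user flags count as "enough."
FLAG_THRESHOLD = 3


@dataclass
class Article:
    url: str
    fake_news_flags: int = 0  # Step 1: user reports classified as "fake news"
    sent_to_fact_checkers: bool = False  # Step 2: routed to third-party review
    disputed: bool = False  # Step 3: "Disputed" label applied
    explainer_url: Optional[str] = None  # link explaining the classification

    @property
    def share_warning(self) -> bool:
        # Disputed articles can still be shared; users just see a warning first.
        return self.disputed

    @property
    def can_be_advertised(self) -> bool:
        # Once disputed, the article cannot be promoted through Facebook ads.
        return not self.disputed


def report_fake_news(article: Article,
                     fact_check: Callable[[str], Optional[str]]) -> None:
    """Record one user flag and, once the threshold is reached, send the
    article for fact-checking exactly once.

    `fact_check` stands in for the third-party fact-checkers: it returns the
    URL of an explainer if the article is judged false, otherwise None.
    """
    article.fake_news_flags += 1
    if article.fake_news_flags >= FLAG_THRESHOLD and not article.sent_to_fact_checkers:
        article.sent_to_fact_checkers = True
        explainer = fact_check(article.url)
        if explainer is not None:
            article.disputed = True
            article.explainer_url = explainer


def demo_checker(url: str) -> Optional[str]:
    # Hypothetical fact-checker that finds every story it reviews to be false.
    return "https://example.org/fact-check"


story = Article("https://example.com/pope-endorses-candidate")
for _ in range(FLAG_THRESHOLD):
    report_fake_news(story, demo_checker)
print(story.disputed, story.share_warning, story.can_be_advertised)  # True True False
```

The point the sketch tries to capture is that the label changes ad eligibility and adds a share warning, but never blocks sharing itself.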

Will this be effective?

Possibly. Poynter recently wrote about a new study suggesting that people do, in fact, respond to fact-checked information. The study, “The Elusive Backfire Effect: Mass Attitudes’ Steadfast Factual Adherence,” concludes that “[b]y and large, citizens heed factual information, even when such information challenges their partisan and ideological commitments.”

It seems that when media organizations present users with well-supported facts, users are likely to accept them as true.

Facebook’s steps to combat fake news will address user behavior, but what about the publishers and the News Feed itself?

Other action Facebook is taking

Facebook is looking at incorporating signals into its algorithms that will help predict whether a story might be fake news. For example, if actually reading a story makes users less likely to share it, chances are high that the article is misleading or does not live up to the claims made in its headline. (That signal, however, could be inaccurate on topics that are sensitive or inflammatory by nature.)

Additionally, Facebook is removing the ability to spoof domains, which will reduce the number of sites that pretend to be real news publications. On the publisher side, it will review publisher activity for policy violations to determine whether “enforcement” is necessary. This seems to indicate that sites may be barred from posting for some time, although the press release does not say so explicitly.
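To make the read-then-share signal concrete, here is a minimal Python sketch. It assumes we can count, for a single article, how often people shared it straight from the headline versus after opening it; the field names and the `SUSPICION_RATIO` cutoff are hypothetical, since Facebook has not published how it measures or weights this signal.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: flag a story when reading it halves (or worse) the share rate.
SUSPICION_RATIO = 0.5


@dataclass
class EngagementStats:
    headline_only_views: int  # saw the post in their feed but did not open it
    headline_only_shares: int  # shares by people who never opened the article
    reads: int  # people who opened the article
    shares_after_read: int  # shares by people who opened it first


def read_share_ratio(s: EngagementStats) -> Optional[float]:
    """Share rate after reading divided by share rate without reading.

    A value well below 1.0 means reading the story made people less likely
    to share it. Returns None when there is not enough data to compare.
    """
    if s.headline_only_views == 0 or s.reads == 0 or s.headline_only_shares == 0:
        return None
    rate_without_reading = s.headline_only_shares / s.headline_only_views
    rate_after_reading = s.shares_after_read / s.reads
    return rate_after_reading / rate_without_reading


def looks_misleading(s: EngagementStats) -> bool:
    ratio = read_share_ratio(s)
    return ratio is not None and ratio < SUSPICION_RATIO


# Example: 4% of headline-only viewers shared, but only 1% of readers did.
clickbait = EngagementStats(headline_only_views=10_000, headline_only_shares=400,
                            reads=2_000, shares_after_read=20)
print(looks_misleading(clickbait))  # True
```

A low ratio is only a hint: a story on a sensitive or inflammatory topic might also get fewer shares once people read it, so a signal like this would more plausibly feed into review than decide anything on its own.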

What this isn’t

Censorship. Facebook is a private company and can censor anything it likes, but at this time it is not censoring any publishers. It is not banning articles or pages or preventing them from being shared. If you still want to share an article despite the “disputed” label and the warning that it is not factual, you are fully able to do so, and your friends on Facebook will be able to see it and share it as well.

What Facebook is removing is the ability to promote a “disputed” article through its advertising platform once that article has been fact-checked. This removes much of the financial incentive, for now. We also know that where there is a lot of money, there will be attempts to work around the system. For now, however, this seems like it could be fairly effective in minimizing the impact of false news stories, both by undermining publishers’ motivation for creating them and by limiting their actual reach.

What does this mean for marketers?

Because the label is applied at the article level rather than the site level, the process will effectively require all sites to be more careful about sticking to the truth and to the standards set forth by the International Fact-Checking Network at Poynter.

If you want your articles to be shared and eligible for the advertising platform, they cannot be written with little regard for the facts. If people report your article, it will need to pass manual review, and a poorly written or poorly sourced article with little outside support for its claims is unlikely to pass that test.

While this move will likely be controversial and remains a work in progress, if Facebook can remove the financial incentives of fake news publishers and interrupt the spread of false stories with the “disputed” label, it will lower the reach of fake news. In an age of information overload, removing information that is wrong or deliberately false just seems like a good idea.

Additional Link: For more about how fake news creators worked and why they did it, listen to this interview podcast from NPR.
